Kubernetes is a powerful system for automating the deployment and orchestration of containerized applications. Currently, it is the most popular container orchestration tool globally.

If you already use Kubernetes, you may know that a cluster typically requires at least one master node and one worker node. In this tutorial, however, I will demonstrate how to run Kubernetes on a single node: not Minikube, not K3s, not MicroK8s, but a full-blown Kubernetes setup on a single machine.

Prerequisites

- Ubuntu 22.04

- At least 2 CPU cores

- At least 2 GB of RAM

- Internet connectivity
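Before going further, you can confirm that the machine actually meets the CPU and RAM minimums above. The following is a minimal POSIX sh sketch. The function names `meets_minimum` and `check_host` are hypothetical helpers and are not part of any tool. The sketch uses 1700 MB rather than 2048 MB as the RAM threshold because /proc/meminfo reports slightly less than the installed memory. To my knowledge, 1700 MB is also the floor that kubeadm's own preflight check applies to control-plane nodes. The sketch also reports whether swap is active, because by default the kubelet refuses to start while swap is enabled.

```shell
#!/bin/sh
# Hypothetical preflight helpers. Thresholds assume 2 CPU cores and ~1700 MB
# of visible RAM (MemTotal reads a little below the installed 2 GB).

# meets_minimum CORES MEM_MB: succeed only if both values reach the minimums.
meets_minimum() {
  [ "$1" -ge 2 ] && [ "$2" -ge 1700 ]
}

# check_host: read the real values from this machine and report them.
check_host() {
  cores=$(nproc)
  # MemTotal is in kB; integer-divide by 1024 to get MB.
  mem_mb=$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)
  echo "CPU cores: $cores, RAM: ${mem_mb} MB"

  # /proc/swaps always has one header line; more lines mean swap is active.
  if [ "$(awk 'END { print NR }' /proc/swaps)" -gt 1 ]; then
    echo "NOTE: swap is enabled"
  fi

  if meets_minimum "$cores" "$mem_mb"; then
    echo "OK: meets the minimum requirements"
  else
    echo "WARNING: below the minimum requirements"
    return 1
  fi
}
```

After sourcing these functions, calling `check_host` prints the detected values, a swap note if needed, and a one-line verdict.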

Sudo Privileges

Before starting, switch to the root user so that there are no permission issues during the installation and configuration process:

sudo su

Enable Kernel Modules

Load the br_netfilter and overlay kernel modules with these commands:

modprobe overlay

modprobe br_netfilter

You can check whether the required modules are loaded:

lsmod | grep -E 'br_netfilter|overlay'
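The lsmod check above can also be turned into a small pass/fail helper. This is a minimal sketch. It assumes both modules are built as loadable modules, as on stock Ubuntu kernels where the modprobe commands above are needed, so that they appear in /proc/modules (the file lsmod itself reads). The function name `check_modules` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: report whether overlay and br_netfilter are loaded.
# Reads /proc/modules by default; another path can be passed to try the
# logic against a sample file. A module compiled into the kernel would not
# appear in /proc/modules and would be reported as missing.
check_modules() {
  modules_file=${1:-/proc/modules}
  missing=0
  for mod in overlay br_netfilter; do
    # Each line of /proc/modules starts with the module name and a space.
    if grep -q "^${mod} " "$modules_file"; then
      echo "${mod}: loaded"
    else
      echo "${mod}: missing"
      missing=1
    fi
  done
  return "$missing"
}
```

Note that modprobe only loads the modules for the current boot. To make systemd load them again at startup, list `overlay` and `br_netfilter` (one per line) in a file under /etc/modules-load.d/, for example one you might name k8s.conf.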

Add Kernel Configuration

Create a new file under /etc/sysctl.d to hold the kernel parameters:

nano /etc/sysctl.d/kubernetes.conf

Add these lines to the file:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1
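If you prefer not to use an interactive editor, you can write the same three lines with a heredoc. This is a minimal sketch. `write_k8s_sysctl` is a hypothetical name, and the optional path argument exists only so the function can be tried on a scratch file before touching /etc.

```shell
#!/bin/sh
# Hypothetical helper: write the three kernel parameters shown above.
# The target defaults to the file used in this tutorial.
write_k8s_sysctl() {
  target=${1:-/etc/sysctl.d/kubernetes.conf}
  # Quoted 'EOF' prevents any expansion inside the heredoc.
  cat > "$target" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

Calling `write_k8s_sysctl` with no argument, as root, produces the same file as the nano edit.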

Reload the kernel configuration

sysctl --system
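To confirm that `sysctl --system` actually applied the values, you can read them back. Each sysctl key corresponds to a file under /proc/sys, with the dots replaced by slashes. This is a minimal sketch with a hypothetical `check_k8s_sysctl` function. The optional root argument exists only for testing against a fake directory tree. The two net.bridge keys exist only while br_netfilter is loaded, so a "missing" value usually points back to the previous step.

```shell
#!/bin/sh
# Hypothetical helper: print the three keys and fail unless all are 1.
# ROOT defaults to empty, i.e. the live /proc/sys of this machine.
check_k8s_sysctl() {
  root=${1:-}
  status=0
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
    path="$root/proc/sys/$(echo "$key" | tr . /)"
    value=$(cat "$path" 2>/dev/null || echo missing)
    echo "$key = $value"
    [ "$value" = "1" ] || status=1
  done
  return "$status"
}
```

You can also print the same values directly with `sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward`.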

Install Containerd

First, install the dependencies

apt update

apt install -y curl gnupg2 software-properties-common apt-transport-https ca-certificates

Add Docker Repo to apt

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add -

add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

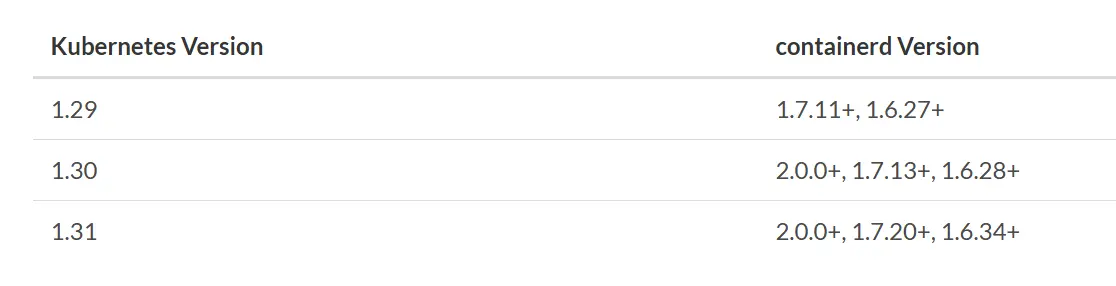

According to the containerd and Kubernetes compatibility table, Kubernetes 1.31.2 requires containerd 1.7.20 or later. In this tutorial, we install containerd 1.7.23, the latest version available at the time of writing.
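You can check the minimum-version rule from the compatibility table mechanically. This is a minimal sketch. It assumes plain MAJOR.MINOR.PATCH version strings with an optional Debian revision suffix such as `-1`. `version_ge` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: version_ge CANDIDATE MINIMUM
# Succeeds if CANDIDATE >= MINIMUM, comparing dot-separated fields numerically.
# Anything from the first "-" onward (e.g. the "-1" in "1.7.23-1") is dropped.
version_ge() {
  awk -v a="${1%%-*}" -v b="${2%%-*}" 'BEGIN {
    na = split(a, x, "[.]"); nb = split(b, y, "[.]")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      # Missing fields count as 0, so "1.7" equals "1.7.0".
      if ((x[i] + 0) > (y[i] + 0)) exit 0
      if ((x[i] + 0) < (y[i] + 0)) exit 1
    }
    exit 0
  }'
}
```

After installation, one way to use it is `version_ge "$(dpkg-query -W -f='${Version}' containerd.io)" 1.7.20 && echo "containerd is new enough"`.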

Install containerd:

apt update

apt install containerd.io=1.7.23-1

Generate containerd's default configuration:

containerd config default > /etc/containerd/config.toml

Next, configure containerd to use the systemd cgroup driver. Open the file:

nano /etc/containerd/config.toml

Find the following section and change SystemdCgroup from false to true:

. . .

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

BinaryName = ""

CriuImagePath = ""

CriuPath = ""

CriuWorkPath = ""

IoGid = 0

IoUid = 0

NoNewKeyring = false

NoPivotRoot = false

Root = ""

ShimCgroup = ""

SystemdCgroup = true

. . .
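Instead of editing config.toml by hand, you can make the same change with sed. This is a minimal sketch. It assumes the file was freshly generated by `containerd config default` and therefore contains a single `SystemdCgroup = false` line, in the runc options section shown above. `enable_systemd_cgroup` is a hypothetical name, and `sed -i` here is the GNU sed form shipped with Ubuntu.

```shell
#!/bin/sh
# Hypothetical helper: flip SystemdCgroup from false to true, keeping the
# original indentation. The path defaults to the generated containerd config.
enable_systemd_cgroup() {
  config=${1:-/etc/containerd/config.toml}
  sed -i 's/^\([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*\)false/\1true/' "$config"
  # Succeed only if the setting is now present and true.
  grep -q '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$config"
}
```

Run it before restarting containerd so that the restart picks up the new setting.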

Then restart containerd and enable it to start on boot:

systemctl restart containerd

systemctl enable containerd

Install Kubeadm, Kubectl, and Kubelet

First, install the dependencies

apt -y install curl vim git wget apt-transport-https gpg

Add Kubernetes repo to apt

mkdir -p -m 755 /etc/apt/keyrings

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.31/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.31/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

Install kubelet, kubeadm, and kubectl, then hold them at this version so they are not upgraded unintentionally:

apt update

apt -y install kubelet=1.31.2-1.1 kubeadm=1.31.2-1.1 kubectl=1.31.2-1.1

apt-mark hold kubelet kubeadm kubectl

After the installation, check the kubectl and kubeadm versions to make sure that version 1.31.2 is installed:

kubectl version --client && kubeadm version

Enable the kubelet so that it starts on boot:

systemctl enable kubelet

Kubernetes Cluster Initialization

First, point the k8s-endpoint hostname to localhost. Open the hosts file:

nano /etc/hosts

Add this line

127.0.0.1 k8s-endpoint

Save and exit.
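You can also add the hosts entry without an editor, and without creating a duplicate if the step is repeated. This is a minimal sketch. `add_hosts_entry` is a hypothetical helper, and the file path is a parameter only so the function can be tried on a copy first.

```shell
#!/bin/sh
# Hypothetical helper: append "127.0.0.1 k8s-endpoint" unless a
# non-comment line already maps the k8s-endpoint name.
add_hosts_entry() {
  hosts_file=${1:-/etc/hosts}
  if grep -Eq '^[^#]*[[:space:]]k8s-endpoint([[:space:]]|$)' "$hosts_file"; then
    echo "k8s-endpoint already present in $hosts_file"
  else
    echo "127.0.0.1 k8s-endpoint" >> "$hosts_file"
  fi
}
```

Running it twice leaves exactly one k8s-endpoint line in the file.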

Pull all control-plane images:

kubeadm config images pull

Initialize the cluster with kubeadm init, passing the --pod-network-cidr option (plus --control-plane-endpoint, explained in the tip below). Before you run the command, make sure that the pod network CIDR does not overlap with your host network subnet. For example, if your host network is 192.168.1.0/24, use something like 10.1.0.0/16 as the pod network.

kubeadm init --pod-network-cidr=10.1.0.0/16 --control-plane-endpoint k8s-endpoint:6443
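Two IPv4 blocks overlap exactly when they agree on the bits covered by the shorter prefix, so you can sanity-check the CIDR choice before running kubeadm init. This is a minimal POSIX sh sketch for IPv4 only. `ip_to_int` and `cidr_overlap` are hypothetical helpers. The sketch also shows why Calico's default pool, 192.168.0.0/16, collides with a 192.168.1.0/24 host network.

```shell
#!/bin/sh
# Hypothetical helper: ip_to_int A.B.C.D -> unsigned 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: cidr_overlap NET1/P1 NET2/P2 -> exit 0 if they intersect.
cidr_overlap() {
  n1=$(ip_to_int "${1%/*}"); p1=${1#*/}
  n2=$(ip_to_int "${2%/*}"); p2=${2#*/}
  # CIDR blocks are either nested or disjoint, so compare the shorter prefix.
  if [ "$p1" -lt "$p2" ]; then p=$p1; else p=$p2; fi
  # Netmask with p leading one bits (relies on 64-bit shell arithmetic).
  mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  [ $(( n1 & mask )) -eq $(( n2 & mask )) ]
}
```

For example, `cidr_overlap 192.168.1.0/24 10.1.0.0/16 || echo "no overlap"` prints "no overlap", while `cidr_overlap 192.168.1.0/24 192.168.0.0/16` succeeds.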

Tips!

From my experience, it is best to set --control-plane-endpoint to a DNS name when initializing the cluster. This makes it much easier to scale the control plane from a single master to a multi-master cluster later.

If you want to scale the master nodes, you just need to point k8s-endpoint to your load balancer IP by editing your hosts file. There is no need to break your existing Kubernetes cluster configuration.

If the initialization succeeds, you will see output like this:

Your Kubernetes control plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join k8s-endpoint:6443 --token 7sibm4.kcawyjy2sakmpgjv \

--discovery-token-ca-cert-hash sha256:c8ec1014a80209ba540c16451135b920b8ee82d717ae8df16d88b81564a321dc \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8s-endpoint:6443 --token 7sibm4.kcawyjy2sakmpgjv \

--discovery-token-ca-cert-hash sha256:c8ec1014a80209ba540c16451135b920b8ee82d717ae8df16d88b81564a321dc

Switch back to a non-root user account (type exit, or log in again as a non-root user) and run these commands:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Running kubectl as root is not recommended.

Install the Calico Cluster Network

Note: Do this on the Master Node

For the pod network, we use Calico. To install it, first create the Tigera operator:

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.4/manifests/tigera-operator.yaml

Next, download the Calico custom resources YAML file:

wget https://raw.githubusercontent.com/projectcalico/calico/v3.26.4/manifests/custom-resources.yaml

Open the file

nano custom-resources.yaml

Find the cidr setting (the default is 192.168.0.0/16) and change it to match the --pod-network-cidr value you used when initializing the cluster. In my case:

cidr: 10.1.0.0/16
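You can also make this edit with sed instead of nano. This is a minimal sketch. It assumes the downloaded file still contains the default `cidr: 192.168.0.0/16` line. `set_calico_cidr` is a hypothetical helper. The sed delimiter is `|` because the CIDR value itself contains a slash.

```shell
#!/bin/sh
# Hypothetical helper: set_calico_cidr NEW_CIDR [FILE]
# Replaces Calico's default pool CIDR with the value given to --pod-network-cidr.
set_calico_cidr() {
  new_cidr=$1
  file=${2:-custom-resources.yaml}
  sed -i "s|cidr: 192\.168\.0\.0/16|cidr: ${new_cidr}|" "$file"
  # Succeed only if the new value is now in the file.
  grep -q "cidr: ${new_cidr}" "$file"
}
```

With the values used in this tutorial, run `set_calico_cidr 10.1.0.0/16` in the directory where the file was downloaded.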

Then apply the custom resources:

kubectl apply -f custom-resources.yaml

It takes some time for all of the pods to reach the Running state. You can watch the progress with:

kubectl get pod --all-namespaces --watch

Remove Taint

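This step is the key to running Kubernetes on a single node. By default, kubeadm taints the control-plane node with node-role.kubernetes.io/control-plane:NoSchedule so that regular workloads are not scheduled on it. Since this cluster has no worker nodes, remove that taint: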

kubectl taint nodes $(hostname) node-role.kubernetes.io/control-plane:NoSchedule-

Checking the Cluster

To check that everything is installed correctly, list the cluster nodes:

kubectl get nodes

It should display a single node with the control-plane role and the Ready status:

NAME STATUS ROLES AGE VERSION

k8s-single-node Ready control-plane 14m v1.31.2
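You can turn the node listing into a pass/fail check, which is handy in scripts. This is a minimal sketch. It assumes the input is `kubectl get nodes --no-headers` output, whose columns are NAME STATUS ROLES AGE VERSION. `all_nodes_ready` is a hypothetical helper. A status such as `Ready,SchedulingDisabled` is deliberately treated as not ready.

```shell
#!/bin/sh
# Hypothetical helper: read "kubectl get nodes --no-headers" on stdin and
# fail if there are no nodes or any node's STATUS column is not "Ready".
all_nodes_ready() {
  awk '
    { total++ }
    $2 != "Ready" { print "not ready: " $1 " (" $2 ")"; bad++ }
    END { if (total == 0 || bad > 0) exit 1 }
  '
}
```

Usage: `kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"`.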

If the status shows NotReady, wait a little longer for the node to become Ready; some pods may still be in the process of being created. To check their status and make sure they are progressing without errors, run:

kubectl get pod --all-namespaces
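On a busy cluster, it helps to list only the pods that still need attention. This is a minimal sketch. It assumes `kubectl get pod --all-namespaces --no-headers` output, whose columns are NAMESPACE NAME READY STATUS RESTARTS AGE. `pending_pods` is a hypothetical helper. It looks only at the STATUS column, so a Running pod whose containers are not all ready yet is not flagged.

```shell
#!/bin/sh
# Hypothetical helper: print namespace/name: status for every pod whose
# STATUS is neither Running nor Completed; exit 1 if any were found.
pending_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 ": " $4; n++ }
       END { if (n > 0) exit 1 }'
}
```

Usage: `kubectl get pod --all-namespaces --no-headers | pending_pods && echo "all pods are up"`.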

Congratulations! You now have a single-node Kubernetes cluster running on Ubuntu 22.04 with containerd and Calico.